But if Oak Ridge can use video cards to run applications faster, why does yours go unused when you're not playing games? Because of several software problems, one of which I'll attempt to solve in my master's thesis.

On a computer or smartphone where the video card supports GPGPU, a DirectCompute or OpenCL program can choose to request either the CPU or the GPU to run its "kernels" (inner-loop subroutines that can be parallelized). But how is it to make an informed choice when it can't tell which one is busier, or which one has more of the specialized extensions it may need? Only the operating system can make an informed choice. I suspect this is why these frameworks see so little use, and why GPGPU potential tends to go untapped everywhere from smartphones to desktops to supercomputers.

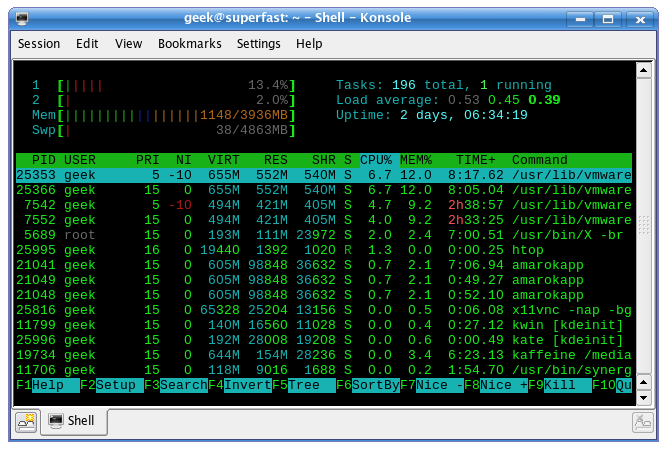

Consider the way a modern operating system's task scheduler works. It assigns processor cores to processes randomly and more or less evenly. As long as the processors are all identical, this makes sense. But if we were to treat the GPU as just another processor, the way OpenCL and DirectCompute would let us if they weren't restricted to the application layer, then suddenly the processors wouldn't all be identical. That's why these platforms are implemented on the application layer. The downside is that applications have to be programmed to specifically request a GPU, which most aren't.
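To make the contrast concrete, here's a minimal Python sketch. It isn't how any real OS scheduler is implemented, and the task names and runtimes are numbers I made up for illustration. It compares round-robin placement, which is reasonable when every processor is identical, with a greedy placement that looks at how long each task would take on each processor.

```python
# Hypothetical per-task runtimes in seconds on each processor (invented numbers).
COSTS = {
    "matrix_multiply": {"cpu0": 8.0, "cpu1": 8.0, "gpu": 1.0},
    "image_blur":      {"cpu0": 6.0, "cpu1": 6.0, "gpu": 1.0},
    "parse_config":    {"cpu0": 1.0, "cpu1": 1.0, "gpu": 5.0},
    "walk_tree":       {"cpu0": 2.0, "cpu1": 2.0, "gpu": 9.0},
}
PROCESSORS = ["cpu0", "cpu1", "gpu"]


def round_robin(tasks):
    """Assign tasks to processors in turn, ignoring what each one is good at."""
    return {task: PROCESSORS[i % len(PROCESSORS)] for i, task in enumerate(tasks)}


def greedy_earliest_finish(tasks):
    """Place each task where it would finish soonest, given work already queued."""
    busy_until = {p: 0.0 for p in PROCESSORS}
    placement = {}
    for task in tasks:
        best = min(PROCESSORS, key=lambda p: busy_until[p] + COSTS[task][p])
        busy_until[best] += COSTS[task][best]
        placement[task] = best
    return placement


def makespan(placement):
    """Time at which the last processor finishes its queue."""
    busy_until = {p: 0.0 for p in PROCESSORS}
    for task, proc in placement.items():
        busy_until[proc] += COSTS[task][proc]
    return max(busy_until.values())


tasks = list(COSTS)
print("round robin:", makespan(round_robin(tasks)))
print("cost-aware: ", makespan(greedy_earliest_finish(tasks)))
```

With these made-up numbers, round robin takes 10 seconds to drain all the queues and the cost-aware placement takes 2. The gap comes entirely from the processors being different; if every column in the cost table were the same, the two policies would be close.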

My project is to implement a virtual OpenCL computing device that intelligently chooses the most appropriate platform for each thread, taking into account both the need for load balancing and the relative suitability of different platforms for each task. A "virtual device" in this sense is like virtual memory, in that:

- The operating system transparently chooses which virtual devices should map to which types of real processors (just like with virtual memory).

- That choice can be changed at runtime by migrating the device's processes.

- The initial choice is made when the device is first used, rather than when the request is made (at which point we don't yet know what kernel it's going to run).
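The three properties above can be sketched in a few lines of Python. This is only a toy model under my own assumptions, not VCL's or OpenCL's actual API: `PhysicalDevice`, `VirtualDevice`, and the `choose` policy are hypothetical names, and "migration" here just moves a list of pending kernel names.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PhysicalDevice:
    name: str
    queue: List[str] = field(default_factory=list)  # kernels pending on this device


class VirtualDevice:
    """Stands in for several physical devices and binds to one lazily."""

    def __init__(self, physical, choose):
        self.physical = list(physical)
        self.choose = choose  # hypothetical policy: (kernel, devices) -> device
        self.bound = None     # no mapping yet; creating the device decides nothing

    def enqueue(self, kernel):
        # The initial choice is deferred to first use, when the kernel is known.
        if self.bound is None:
            self.bound = self.choose(kernel, self.physical)
        self.bound.queue.append(kernel)
        return self.bound

    def migrate(self, target):
        """Remap at runtime, carrying pending work over to the new device."""
        if self.bound is not None and self.bound is not target:
            target.queue.extend(self.bound.queue)
            self.bound.queue.clear()
        self.bound = target


cpu, gpu = PhysicalDevice("cpu"), PhysicalDevice("gpu")


def toy_policy(kernel, devices):
    # Assumption for the demo only: kernels named "parallel_*" suit the GPU.
    return gpu if kernel.startswith("parallel_") else cpu


vdev = VirtualDevice([cpu, gpu], toy_policy)
print(vdev.bound)                      # still unbound after creation
print(vdev.enqueue("parallel_sum").name)
vdev.migrate(cpu)                      # e.g. the GPU became busy
print(cpu.queue, gpu.queue)
```

The application only ever holds `vdev`, so neither the first binding nor the later migration is visible to it, which is the sense in which the mapping is transparent.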

This device will set itself as the system default. It will replace the physical devices it's encapsulating on the device list. Finally, it'll declare itself as having all their types and capabilities combined. That way, it's fully backward-compatible -- the OpenCL programmer doesn't even have to have heard of my project, nor of any of the particular types of coprocessor the computer has. (The latter is especially important, because a lot of the code being written today will probably someday run on coprocessor types that haven't even been thought of yet.)
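As a rough illustration of "all their types and capabilities combined", here's a Python sketch. The flag values mirror OpenCL's `cl_device_type` bitfield, but the dictionaries describing each device and the `combined_profile` helper are hypothetical stand-ins for whatever the real device queries would return.

```python
from enum import Flag
from functools import reduce
from operator import or_


class DeviceType(Flag):
    # Values mirror OpenCL's cl_device_type bitfield constants.
    DEFAULT = 1 << 0
    CPU = 1 << 1
    GPU = 1 << 2
    ACCELERATOR = 1 << 3


def combined_profile(devices):
    """Return the type flags and extension set a virtual device would advertise.

    `devices` must be non-empty; each entry is a dict with "type" and
    "extensions" keys (a made-up layout for this sketch).
    """
    types = reduce(or_, (d["type"] for d in devices))
    extensions = set().union(*(d["extensions"] for d in devices))
    # The virtual device also presents itself as the system default.
    return types | DeviceType.DEFAULT, extensions


physical = [
    {"type": DeviceType.CPU, "extensions": {"cl_khr_fp64"}},
    {"type": DeviceType.GPU, "extensions": {"cl_khr_fp64", "cl_khr_gl_sharing"}},
]
types, extensions = combined_profile(physical)
print(types)
print(sorted(extensions))
```

One consequence of advertising the union is that the virtual device then has to route any kernel that needs a given extension to a physical device that really has it. In this sketch, a kernel relying on `cl_khr_gl_sharing` could only ever land on the GPU.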

I'm thinking of implementing this as an extension to MOSIX VCL. VCL already implements virtual devices that act as switches for distributed computing clusters. All it lacks is the ability to abstract out a system's heterogeneity: it currently creates a separate virtual device for each physical architecture present in the cluster. Not only will extending VCL make my job easier, but it'll also perform better on heterogeneous distributed systems than if my device and VCL were layered together.

Two components on the chart above are worth describing:

- Computational Units: Includes all types of devices that can run OpenCL. This category already includes CPUs and GPUs, and will include FPGAs soon. May eventually include quantum computing, neuromorphic computing, wetware chips, and other paradigms not yet proposed (although these will probably mean major extensions to OpenCL). I expect computers using a Swiss-army-knife configuration of all these different paradigms to dominate the consumer market by the early 2020s, for three reasons:

    - Media hype about the potential of new computing paradigms is likely to inspire consumer demand for them, long before they're actually ready to replace conventional CPUs completely.

    - Moore's Law may not last through the 2020s. If it doesn't, the only way to continue the current exponential growth in performance will be to adopt new paradigms.

    - Even many consumers who've never heard of, let alone used, GPGPU already have GPGPU-capable hardware. The same was true for the 64-bit x86 architecture, as well as several extensions to it such as SSE, so it seems reasonable to expect it to hold for quantum computing as well.

- Performance Predictor: Uses trainable heuristics -- probably a rule induction algorithm -- to estimate a process's future performance on each of the computational units available. Will take into account static code analysis, your computer's hardware specs, and observations of past performance of the same kernel. (Kernels rarely use branching paths, unpredictable while-loops, or recursion. The performance analysis is thus much simpler in practice than in theory.)
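To give a feel for the predictor's interface, here's a deliberately simple Python sketch. It is not rule induction: I've swapped in the crudest thing that combines the same three inputs, namely an operation count (standing in for static code analysis), a per-device throughput figure (standing in for hardware specs), and a running mean of past runtimes for the same kernel. The class name, method names, and all the numbers are my own inventions for the example.

```python
from collections import defaultdict


class PerformancePredictor:
    """Toy predictor: static estimate first, observed mean once history exists."""

    def __init__(self, device_throughput):
        # Hypothetical operations-per-second figure for each device.
        self.device_throughput = dict(device_throughput)
        self.history = defaultdict(list)  # (kernel, device) -> observed runtimes

    def observe(self, kernel, device, seconds):
        """Record how long a kernel actually took on a device."""
        self.history[(kernel, device)].append(seconds)

    def predict(self, kernel, op_count, device):
        """Estimated runtime in seconds of `kernel` on `device`."""
        past = self.history.get((kernel, device))
        if past:
            return sum(past) / len(past)
        # No history yet: fall back to operation count divided by throughput.
        return op_count / self.device_throughput[device]

    def best_device(self, kernel, op_count):
        """Device with the lowest predicted runtime for this kernel."""
        return min(self.device_throughput,
                   key=lambda d: self.predict(kernel, op_count, d))


predictor = PerformancePredictor({"cpu": 1e9, "gpu": 1e10})
print(predictor.best_device("saxpy", 2e9))   # static estimate only

# Suppose the GPU turns out much slower in practice, e.g. due to transfer costs.
predictor.observe("saxpy", "gpu", 3.0)
predictor.observe("saxpy", "gpu", 5.0)
print(predictor.best_device("saxpy", 2e9))   # history now overrides the estimate
```

The observed mean here ignores input size entirely, which a real predictor couldn't get away with. The point is only that measurements should be able to overrule the static guess once they exist.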

Ideally, this scheduler should generalize to other computing paradigms such as quantum, FPGA and wetware computing, once those become widely available (although OpenCL will have to be heavily extended before it can support them). From what I've seen, these paradigms all offer even greater performance gains, but in even narrower ranges of applications, so they're even more at risk of being underutilized.

I envision that future computers will be increasingly heterogeneous. Whether it's a smartphone, a workstation or an enterprise cloud-computing data center, the general-purpose computer of the future will probably use a Swiss army knife of coprocessors. Enthusiasts and cloud-computing providers will mix and match different CPU architectures and vendors. But unlike a Swiss army knife, it will be using all of its tools at once, and the task scheduler will have to choose the right tools for the right jobs.

OpenCL's widespread support (including the upcoming compiler from Altera for its FPGAs) and extensibility make me optimistic about its potential to keep programming manageable, and VCL strikes me as a good platform to build my project on.
